You’re worried about the wrong thing with AI

The real risk isn’t superintelligence. It’s consolidation, dependence, and fragility.

TL;DR: The biggest AI risks aren’t superintelligence or sentience. Not yet. The imminent risk is that we’re reorganizing our economy around a handful of AI companies before understanding the consequences.

The four critical risks: (1) Economic monoculture replacing diverse businesses with consolidated platforms, (2) Creative collapse as AI trains on human work without compensation, undermining incentives to create, (3) Cognitive atrophy as we outsource thinking to machines and centralize truth, and (4) Infrastructure strain as advanced reasoning models consume 43x more energy, driving up electricity bills and climate emissions.

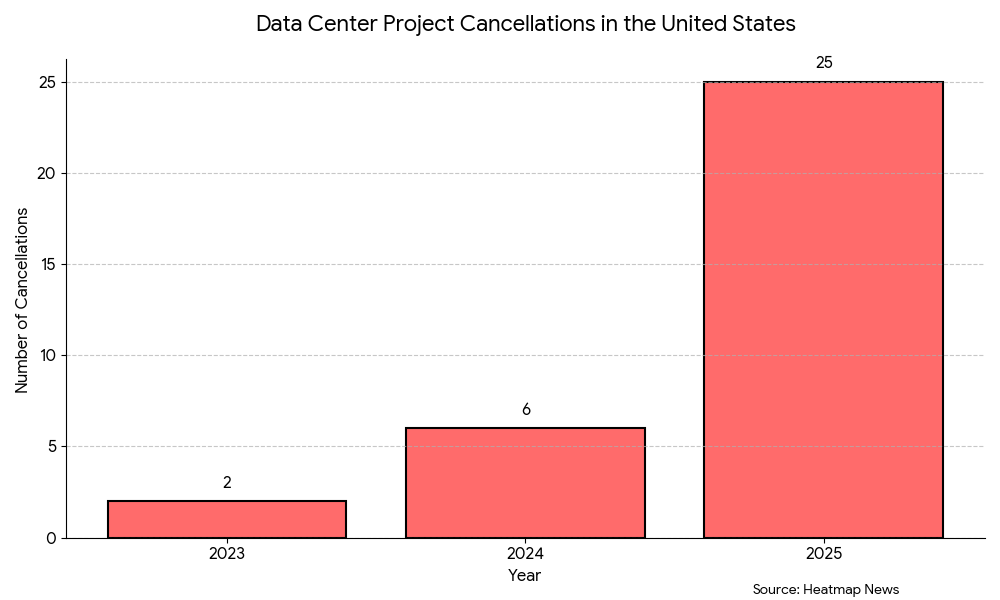

Community resistance is working: $64B in data center projects blocked or delayed since May 2024, mostly in red states. This is where democratic pressure matters most.

Bottom line: Resilience beats efficiency. We haven’t handed over the keys yet, but we’re close.

Most conversations about AI risks start in one of two places: superintelligence and alignment (will AI wipe out humanity, as in The Matrix or The Terminator?), or water use and its effect on surrounding communities.

These concerns are valid. Alignment, environmental costs, and the shitty business practices of AI companies gobbling up other people’s work all need solutions soon.

But we need to triage our concern. More immediate failures are unfolding right now, and we won’t get the chance to debate long-term hypotheticals if we don’t address them first.

The uncomfortable truth is that many of the most impressive AI tools today are also the most dangerous in this respect, precisely because they’re so empowering. They lower barriers. They feel democratic. They make individuals and small teams incredibly capable, both for good and ill. We’ve already seen how scammers and deepfakers can cause maximum chaos with minimal effort. A deepfake robocall targeting New Hampshire voters cost $1 and took less than 20 minutes to create, while scammers need as little as three seconds of audio to create convincing voice clones.[1] Deepfake fraud losses exceeded $200 million in Q1 2025 alone.[2]

These tools are genuinely democratizing. That’s both exciting and dangerous. What makes this moment very different from past technological disruptions is that this democratization is happening within an increasingly consolidated infrastructure controlled by a handful of companies. The power is spreading out while the foundation is narrowing. That’s real fragility on an immediate timescale.

The most pressing danger isn’t AI becoming superintelligent. It’s us reorganizing our economy, our minds, and our critical systems around a small number of profit-driven AI companies before we understand the consequences.

I’m not a Luddite. I’m a techno-optimist, if anything. I believe deeply in the democratic power of tech. But we’re currently in a phase of extreme consolidation, where the largest companies have the power to muscle out competition and lock users into products that steadily degrade over time, the so-called “enshittification” cycle that’s affected the entire internet.[3] I do believe that, eventually, market and social forces can push those companies to change or collapse. The problem is the damage done before that correction happens, especially since governments have been reluctant to limit tech monopolies.

In fact, this moment in AI is genuinely fascinating and exciting. Systems like Claude Code & Cowork make programming and agentic AI more accessible to anyone willing to experiment, placing tools into the hands of anyone with an internet connection and the patience to learn. Small teams can wield absurd amounts of power, and it feels like a new tool drops every week that shifts what’s possible.

We’re in a seminal moment with AI. And that’s precisely why we need to be thoughtful about how we move forward. We’re developing printing-press-level disruption with Manhattan Project-level speed. These technologies can disrupt entrenched power structures (e.g., the printing press vs. the Catholic Church in the 16th century),[4] but they also come with enormous downstream consequences we only understand in hindsight.

The risks I’m most concerned about aren’t speculative or far off. They’re already unfolding, and they cluster around a few structural failures:

Economic monoculture: AI-driven efficiency replacing diverse local and online businesses and entry-level jobs with a small number of dominant platforms.

Intellectual property and the collapse of creativity: Work by human creators (artists, scientists, and journalists) training their replacements without permission or compensation, reducing incentives to produce new, diverse work.

Cognitive atrophy & centralized truth: Outsourcing reading, analysis, and judgment to machines, weakening our ability to detect errors, manipulation, or bad faith, flattening nuance and competing perspectives.

Infrastructure, where democratic pressure still matters: Energy, water, and grid dependencies growing faster than public oversight or sustainable planning.

I know I’m not saying anything earth-shattering here. We know that these risks are present and emergent. But each of them reinforces the others, creating a feedback loop that rewards consolidation and punishes resilience. Let’s take them one at a time, in no particular order, and game out where we can still push back.

1. Economic monoculture is fragile by design

Economists love using ecosystem metaphors when referring to the economy. AI is, very simply, pushing us toward a monoculture, one with far less ability to adapt to changing circumstances. We’re staking an enormous share of economic activity on the assumption that AI will be the primary engine of future growth.

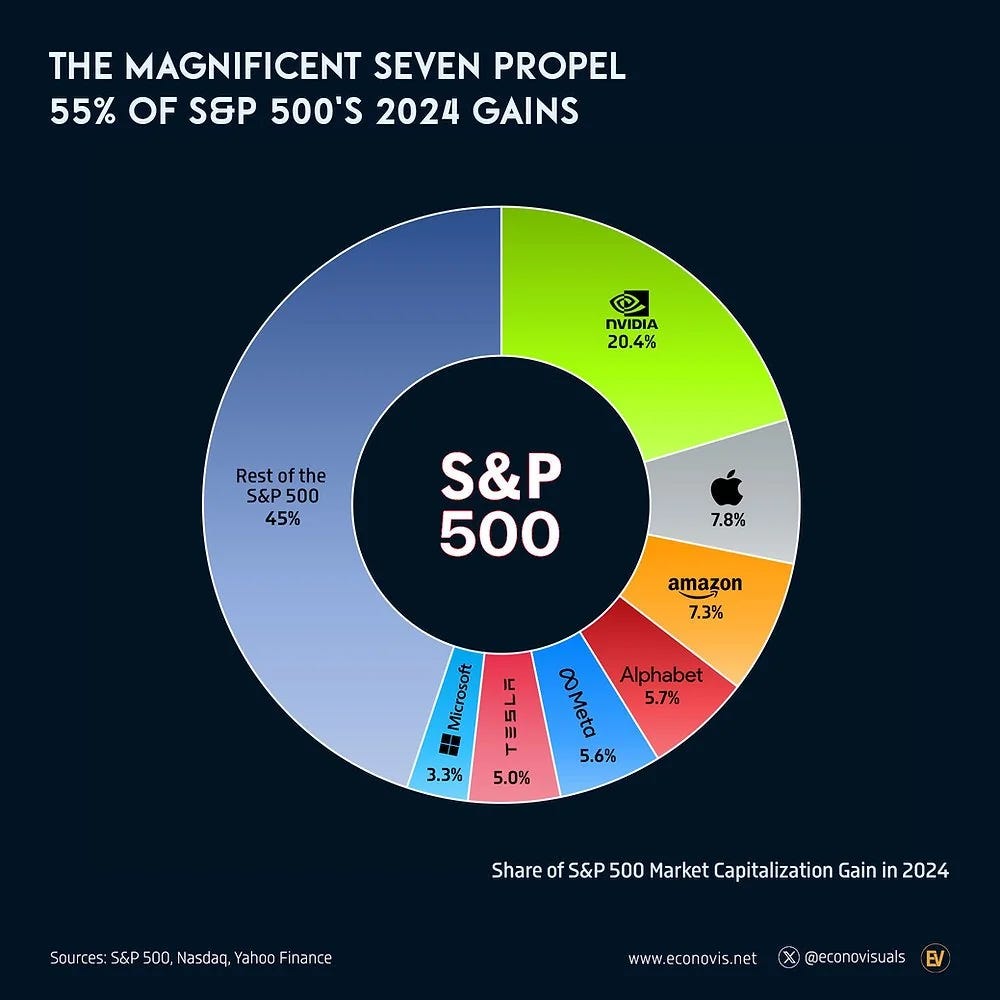

Seven major tech firms (Nvidia, Apple, Microsoft, Alphabet, Amazon, Meta, and Tesla) make up roughly a third of the S&P 500’s total value,[5] up from just 12% a decade ago. The “Magnificent Seven” alone accounted for roughly 42% of the S&P 500’s total return in 2025,[6] with tech and communications stocks combined driving nearly 60% of all market gains. This isn’t just market concentration; it’s infrastructure concentration. These same companies control the compute, the models, and increasingly, the power grids.

At the same time, small businesses and many entry-level white-collar jobs are disappearing at alarming speed. Tutors, agents, consultants, and recent graduates are finding entire career paths evaporating. SignalFire found a 50% decline in new role starts for people with less than one year of experience between 2019 and 2024,[7] consistent across sales, marketing, engineering, and design. Whether these were “good jobs” or not is a conversation worth having, but it isn’t relevant to this argument. Deployment is outpacing deliberation, fueled by enormous amounts of venture capital and competitive pressure from OpenAI, Meta, Alphabet, and xAI.

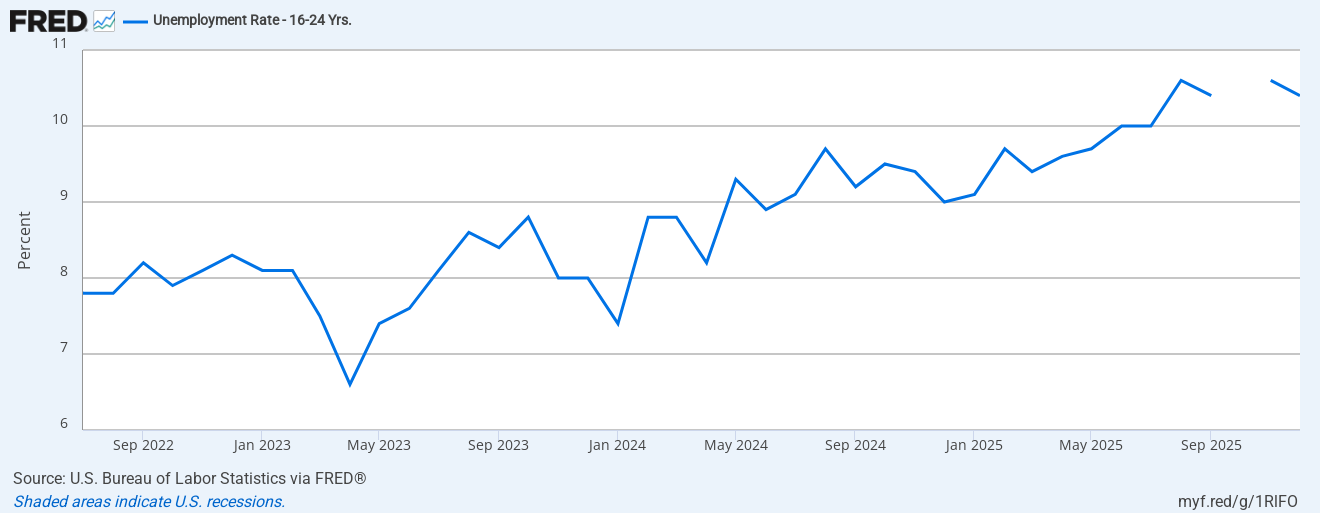

Youth unemployment has long been a canary in the coal mine for broader economic stress, and it’s rising fast, hitting 10.5% for workers aged 16 to 24 in August 2025, the highest since early 2021.[8] If you break it down by education, the stats become even more alarming: for the first time, unemployment among young college graduates is rising faster than among those without a degree, exactly the opposite of what we’d expect in a healthy economy.

“Adapt or die” only works if you can get the first syllable out before the “die” part kicks in.

We’ve all played Monopoly, right? When a small number of companies run the show, they have leverage over regulators, customers, and potential competitors, and they make the rules. When there’s real competition, market forces can check this behavior. When there isn’t, you get OpenAI co-founder and president Greg Brockman donating $25 million to MAGA Inc., and Jeff Bezos’s Amazon paying $40 million to license Melania Trump’s documentary (the highest price ever paid for documentary rights).[9][10] These aren’t business decisions; they’re protection money.

Economic diversity is insurance against the unknown. It’s the immune system of a healthy economy, capable of absorbing shocks by leaning on varied sectors and skill sets. Hyper-optimized systems perform beautifully under ideal conditions and fail catastrophically when those conditions change. Consolidating large portions of the global economy into a handful of AI companies is a massive bet, one that risks consequences far larger than anything we’ve experienced in recent decades if it goes wrong.

2. Intellectual property and the collapse of creativity

Just as economic monocultures are dangerous, creative and intellectual monocultures carry their own risks. We’re living through a period of global instability and ideological conflict, when pluralism, debate, journalism, and art matter more than ever.

Large language models have proven themselves to be extraordinary synthesizers of information. That’s genuinely valuable. There is far more content in the world than any individual could consume or understand in a lifetime. But these systems are built on the accumulated labor of historians, writers, scientists, artists, and journalists, often without consent, compensation, or credit.

The more powerful this synthesis becomes, the stronger the disincentive to create original work. If your life’s work can be absorbed, summarized, and redistributed in seconds, fewer people will choose to invest years producing it.

We’re already seeing this play out in journalism. CNN’s website traffic dropped 30% compared to the previous year after Google launched AI Overviews in May 2024,[11] summaries that extract the value from reporting without sending readers to the articles.

Publishers are stuck: opt out of AI summaries and lose all Google traffic, or stay in and watch your business model evaporate. The economics of investigative journalism depend on people actually reading (and paying for) the work. When AI turns months of reporting into a three-sentence summary, who funds the next investigation?

(I touched on this in my last Substack piece on maintaining sanity & ideals in a divided America.)

The problem extends beyond economics to accuracy. A recent study of nearly 5,000 AI-generated science summaries found that most models overgeneralized research findings, and newer models were worse than older ones.[12] When explicitly instructed to “stay faithful to the source material,” AI summaries were twice as likely to contain inaccurate generalizations. One AI summary incorrectly reinterpreted a clinical trial finding that a diabetes drug was “better than placebo” as proof of an “effective and safe treatment,”[13] a dangerous leap that could lead doctors to prescribe unsafe interventions.

This matters because complex scientific research is being flattened into bullet points that lose crucial nuance. Limitations, uncertainties, and qualifiers disappear. A study on one specific population becomes a universal recommendation. Correlation becomes causation. The more people rely on AI summaries instead of reading the actual research, the less they understand the boundaries of what we actually know, and the more tenuous and fragmented our collective grasp on reality becomes.

An acquaintance of mine, Kelly, experienced this firsthand as a visual artist whose style was aggressively absorbed by generative AI models. After decades of making a living from her artwork, she was forced to find another job and joined a precedent-setting lawsuit against Stability AI, Midjourney, and DeviantArt,[14] becoming one of the first artists to fight back legally. She wrote an excellent Substack piece about the experience.

Author Brandon Sanderson made a similar point in his "We Are the Art" keynote: the value of creative work isn't just the output, it's the human process that created it. AI models trained on creative labor without compensation don't just copy techniques; they collapse the economic model that makes sustained creative work possible.

Creators inadvertently train their replacements, leading to less human output, narrower training data, and increasingly homogenized content. Compressing visual art, investigative journalism, and scientific research into watered-down versions of themselves creates a cultural monoculture that mirrors the dangers of economic monoculture. It makes societies less adaptable, less imaginative, and more susceptible to manipulation—especially during periods of rapid change.

3. Cognitive atrophy and the brittleness of centralized truth

There’s another knock-on effect of widespread AI adoption that’s already becoming visible, particularly in education. Critical thinking skills, already under strain, are declining faster among those who use AI often.[15] LLMs remain “right enough” often enough to inspire confidence, even as hallucinations remain common and occasionally disastrous when taken at face value.

We teach math not because humans can out-calculate machines, but so we understand why answers make sense and can recognize when something is wrong. Outsourcing reading, analysis, and synthesis to LLMs is undeniably faster and often useful. But speed isn’t the core issue. Judgment is.

The danger emerges when we stop checking the machine. Fact-checking, already under pressure from disinformation ecosystems, becomes optional. In January 2025, Meta ended its U.S. fact-checking program, which had supported nearly 160 fact-checking projects, some receiving up to 45% of their revenue from Meta.[16] The skill of thinking clearly, difficult even without algorithmic noise, erodes further. As deepfakes improve and signals become harder to distinguish from noise, our diminished ability to spot manipulation becomes a serious vulnerability.

This cognitive atrophy is compounded by another structural problem: as hundreds of institutions collapse into two or three dominant LLMs, truth itself becomes brittle.

That’s an uncomfortably small number of sources if any are compromised or subtly steered. Truth is not just about factual accuracy; it’s often plural. Even perfectly “correct” answers can flatten nuance, erase context, and remove the productive tension that allows societies to function. The allure of clean, bulleted certainty is and has always been strong. And the erosion of nuance seems to be already contributing to rising social polarization.

A healthy society relies on many perspectives: multiple news organizations, civic institutions, and cultural lenses. We don’t need universal agreement. We need the capacity to hold disagreement with empathy. Somewhere between capital-T Truth and total relativism lies reality. Reality lives in the messy middle, and messy doesn’t compress well into tokens. It’s where most of the conversation gets lost, and mistrust grows.

This is how the “cyborgification” of the mind happens: not through malice, but through convenience, shaped by actors whose incentives are not aligned with the public good. We lose the ability to check the machine and the diversity of sources to check it against.

Perhaps most consequentially, these cognitive and institutional collapses are happening while we’re betting our power grid on the companies driving the trend.

4. Infrastructure: Where democratic pressure still matters

The final risk is the most tangible, and the one where public leverage can be most influential right now: infrastructure, energy, and environmental damage.

The AI issue generating the most hand-wringing overall is municipal water use. AI companies do use significant amounts of water to cool data centers, which is of great concern to farmers and aging local infrastructure systems. (Hank Green’s video is a superb breakdown of how all this works and the most pressing concerns.)

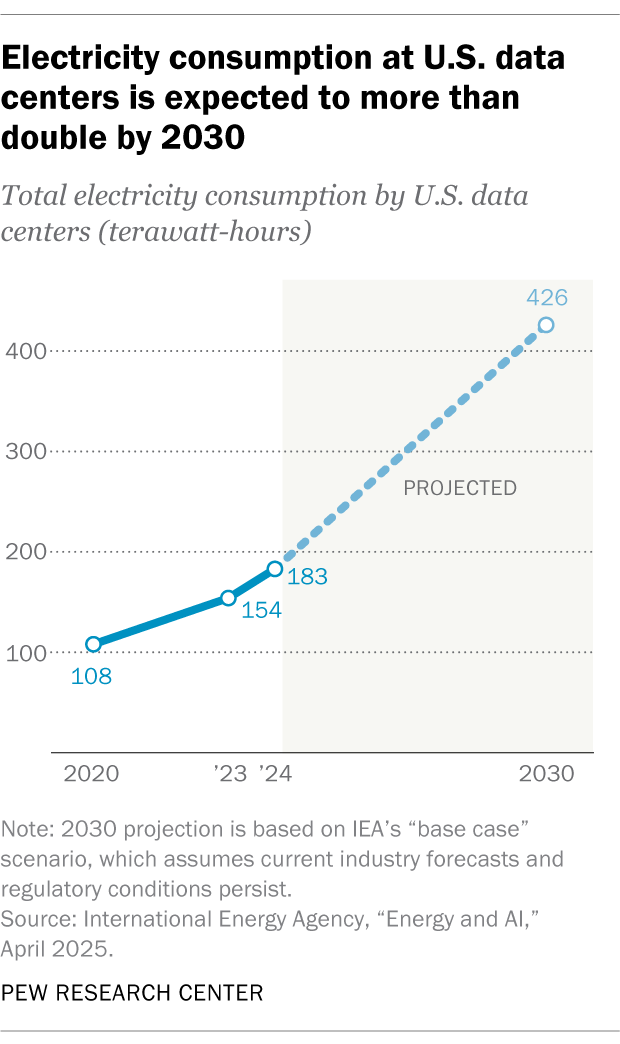

However, while excessive water use is important, the biggest forward-looking infrastructure risk comes from the massive energy costs of AI. Advanced tools like agents, reasoning models (which consume 43x more energy), voice modes, and personalized AI will cause electricity demands to explode over the next few years. This power is largely drawn from grids still dependent on fossil fuels, driving up costs and emissions. In 2024, fossil fuels, including natural gas and coal, made up nearly 60% of the electricity supply for U.S. data centers, and that share isn’t shrinking fast enough.[17]

The costs to the environment will be devastating in even the most optimistic projections, if you’re pro-breathable air and drinkable water. A report by environmental NGO Food & Water Watch says renewables will cover at most 40% of new data center electricity demand by 2030. The remaining 60% or more will continue to come from fossil fuels, equivalent to adding over 15 million gasoline-powered cars to the road each year. That would cancel out the benefit of nearly every single electric vehicle sold on the planet, just to support data center growth in the U.S. The report warns that electricity sector emissions in the U.S. could rise more than 56% between now and 2035 due to AI’s fossil fuel dependency, “an unthinkable increase at this stage in the climate crisis.”

And the costs are already showing up in people’s electricity bills. In the market stretching from Illinois to North Carolina, data centers accounted for an estimated $9.3 billion price increase, with average residential bills expected to rise by $18 a month in western Maryland and $16 a month in Ohio.[18] One study by Carnegie Mellon estimates that data centers and cryptocurrency mining could lead to an 8% increase in the average U.S. electricity bill by 2030, potentially exceeding 25% in the highest-demand markets.[19]

We are betting that massive data centers and expanded energy infrastructure are the key to economic success and AI dominance, a hypothesis that remains completely unproven. These are enormous private bets, and our climate, ecosystems, and public grids are part of the stake. When power companies prioritize data centers over residential needs, or when local grids buckle under new demand, regular people pay the price in higher bills and potential blackouts. And the long-term climate forecast worsens: more storms, less biodiversity, and ecological collapse.

Community pushback

At least 16 data center projects worth a combined $64 billion have been blocked or delayed since May 2024 due to local opposition. Data center cancellations quadrupled in 2025, with 25 projects canceled due to community resistance, up from just 6 in 2024 and 2 in 2023.[20]

These aren’t just blue-state NIMBY victories. Most cancellations were in red states like Kentucky and Indiana, in counties that voted for Trump by more than 20 points. In September 2025, Google withdrew a 468-acre data center proposal in Indianapolis minutes before a city council vote, after hundreds of residents packed the meeting in opposition, requiring two overflow rooms.[21] Even my famously pro-business home state of Georgia has become “ground zero” in the fight to ban data centers.[22] In a starkly divided era, this issue fosters agreement across the board.

It’s bipartisan because the concerns driving this resistance are practical, not ideological: water use is cited in more than 40% of contested projects, followed by energy consumption and higher electricity prices.[23] People aren’t opposed to technology. They’re opposed to bearing the costs of a bet Silicon Valley is placing with their infrastructure.

This is an area where democratic pressure can most effectively shape outcomes right now. Politicians approve or deny these projects, and public demand for renewable energy can mitigate environmental damage while keeping power affordable. Unlike the economic and cognitive shifts already underway, infrastructure decisions are still being made in public view. Which means we have the best chance of affecting them.

The vulnerability window is now

AI companies are not primarily aligned with human flourishing or institutional stability. “Move fast and break things” is a poor philosophy when what replaces broken systems is shallow, fragile, or extractive.

These companies are designed to maximize profit, growth, and investor returns. Regulatory guardrails, where they exist, are treated as obstacles to be circumvented. Google, Meta, and OpenAI mobilized against California’s SB 1047 safety bill, arguing it would “hamper innovation and undermine American competitiveness,” and the bill was ultimately vetoed.[24] OpenAI has even lowered its internal safety standards, now willing to release models with “high” or “critical” risk if competitors do so first, abandoning its previous commitment not to release models above “medium risk.”[25] Meanwhile, the narrative of an AI arms race is weaponized to justify regulatory inaction: executives like Sam Altman and Eric Schmidt have warned Congress that regulation will benefit China, while researchers document how this “arms race framing” is used to justify accelerated deployment, often in contradiction to the safety and reliability standards that govern other high-risk technologies.[26]

We’re already operating outside a true free-market dynamic. AI firms face formidable barriers to entry in compute costs and talent, concentrating power among a handful of well-funded companies. These firms are increasingly integrating with military and surveillance systems: Google, OpenAI, Anthropic, and xAI each received Pentagon contracts worth up to $200 million in 2025, after removing prohibitions on military use.[27] When companies can use one model to train the next through synthetic data, compounding advantages risk locking in dominance at the level of raw compute itself, with leading firms projecting compute advantages growing 3.4x annually.[28]

Handing tools of this magnitude to authoritarian governments or unaccountable institutions is a real and present danger for free societies. Global coordination and regulation aren’t luxuries here; they’re necessities.

Resilience beats efficiency

I personally love using AI. I’m confident it will be the most consequential technology of our generation. Which is precisely why the choices we make now matter more than the hypotheticals we argue about later.

Alignment with artificial intelligence matters for the long-term survival of our species. But alignment with ourselves—our economic structures, our institutions, our energy systems, and our cognitive habits—is the prerequisite. A society that has outsourced its thinking, collapsed its economic diversity, strained its power grid, and centralized its sources of truth won’t be capable of responding wisely to any future risk, AI-related or otherwise.

The climate math is starkly simple: without intervention, AI data centers will cancel out the benefit of nearly every electric vehicle sold on the planet and could drive U.S. electricity emissions up more than 56% by 2035. Your power bills are already rising to subsidize this bet. But community resistance is working: $64 billion in data center projects blocked or delayed since May 2024, mostly in places that voted for Trump by 20+ points. Infrastructure decisions are still being made in public view, which means we still have leverage.

Resilience has always looked inefficient in the short term. Diverse economies, redundant institutions, human expertise, and slower decision-making feel wasteful. Right up until they’re the only things standing between stability and collapse. Hyper-optimized systems perform beautifully under ideal conditions and fail catastrophically when those conditions change.

We are still early. Public opinion still matters. Policy still matters. We haven’t handed over the keys yet, but we’re close.

If you’re concerned:

Call or email your state representative (U.S. readers). That’s where data center approvals happen and where you have the most leverage.

Be intentional about your own AI use. These tools are powerful, but every query has a cost, and not all AI companies are created equal. Use them when they genuinely help, not out of habit. When possible, choose companies with stronger transparency and ethical commitments.

Support human creators. Subscribe to journalism, buy art directly, cite original sources.

Demand transparency. Companies should disclose energy and water use for AI services.

If we continue optimizing for speed, scale, and short-term profit, by the time we figure out how to align AI, we’ll have already misaligned ourselves to the world it’s shaping. If we stay on our current trajectory, that world will be faster and shallower, built on the backs of the poorest among us, with power even more centralized in the hands of the few.

The question isn’t whether AI transforms everything. It’s whether we shape that transformation, or whether we let a handful of companies do it for us.

AI use disclosure: This piece was edited using Claude, the thumbnail art was created with Midjourney, and the Heatmap chart was generated with Gemini.

1. "Deepfake Statistics 2025: The Rise of AI-Generated Deception," DeepStrike.io, 2025. https://deepstrike.io/blog/deepfake-statistics-2025

2. "Why detecting dangerous AI is key to keeping trust alive," World Economic Forum, July 2025. https://www.weforum.org/stories/2025/07/why-detecting-dangerous-ai-is-key-to-keeping-trust-alive/

3. Cory Doctorow, "Potemkin AI," Pluralistic, January 21, 2023. https://pluralistic.net/2023/01/21/potemkin-ai/

4. "What we can learn from Martin Luther about today's technological disruption," World Economic Forum, October 2017. https://www.weforum.org/stories/2017/10/what-we-can-learn-from-martin-luther-about-todays-technological-disruption/

5. "Why the U.S. economy and S&P 500 are diverging," J.P. Morgan Private Bank, 2025. https://privatebank.jpmorgan.com/apac/en/insights/markets-and-investing/tmt/why-the-us-economy-and-sp-500-are-diverging

6. "Overvalued 500: Risks of Tech-Driven Bubble," AInvest, 2026. https://www.ainvest.com/news/overvalued-500-risks-tech-driven-bubble-2601/

7. "SignalFire State of Talent Report 2025," SignalFire, 2025. https://www.signalfire.com/blog/signalfire-state-of-talent-report-2025

8. "Unemployment Rate - 16 to 24 years," Federal Reserve Economic Data (FRED), St. Louis Fed. https://fred.stlouisfed.org/series/LNS14024887

9. "OpenAI president Greg Brockman donated $25 million to a Trump super PAC," The Verge, 2026. https://www.theverge.com/ai-artificial-intelligence/867947/openai-president-greg-brockman-trump-super-pac

10. Edmund Lee and Benjamin Mullin, "Amazon's Deal for Melania Trump Film Draws Criticism," The New York Times, January 28, 2026. https://www.nytimes.com/2026/01/28/business/media/amazon-melania-trump-film-critics.html

11. "Google's AI Overviews Are Undercutting Online Publishers," NPR, July 31, 2025. https://www.npr.org/2025/07/31/nx-s1-5484118/google-ai-overview-online-publishers

12. "Generalization bias in large language model-generated summaries of biomedical research," Royal Society Open Science, April 2025. https://royalsocietypublishing.org/rsos/article/12/4/241776/235656/Generalization-bias-in-large-language-model

13. "AI research summaries exaggerate findings," Inside Higher Ed, April 24, 2025. https://www.insidehighered.com/news/tech-innovation/artificial-intelligence/2025/04/24/ai-research-summaries-exaggerate-findings

14. "Artists file class action lawsuits against AI image generators," Associated Press, January 2023. https://apnews.com/article/artists-ai-image-generators-stable-diffusion-midjourney-7ebcb6e6ddca3f165a3065c70ce85904

15. "To Think or Not to Think: The Impact of AI on Critical Thinking Skills," National Science Teaching Association (NSTA), 2025. https://www.nsta.org/blog/think-or-not-think-impact-ai-critical-thinking-skills

16. "Q&A: Fact-checking historian Lucas Graves weighs in on Meta's decision to shut down its global fact-checking program," Columbia Journalism Review, 2025. https://www.cjr.org/tow_center/qa-fact-checking-historian-lucas-graves-weighs-in-on-metas-decision-to-shut-down-its-global-fact-checking-program.php

17. "Data Center Energy Needs Are Upending Power Grids and Threatening the Climate," Environmental and Energy Study Institute (EESI), 2025. https://www.eesi.org/articles/view/data-center-energy-needs-are-upending-power-grids-and-threatening-the-climate

18. "What we know about energy use at U.S. data centers amid the AI boom," Pew Research Center, October 24, 2025. https://www.pewresearch.org/short-reads/2025/10/24/what-we-know-about-energy-use-at-us-data-centers-amid-the-ai-boom/

19. "Data center growth could increase electricity bills," Carnegie Mellon University, 2025. https://www.cmu.edu/work-that-matters/energy-innovation/data-center-growth-could-increase-electricity-bills

20. "Bipartisan local backlash to data centers halts $64B in development," The Hill, 2025. https://thehill.com/policy/technology/5605667-data-center-criticism-study/

21. "Google backs down from proposed data center after months of community pushback," WFYI Indianapolis, September 23, 2025. https://www.wfyi.org/news/articles/indianapolis-council-google-data-center-vote-withdrawl

22. "Georgia becomes 'ground zero' for US fight to ban datacenters," The Guardian, January 26, 2026. https://www.theguardian.com/technology/2026/jan/26/georgia-datacenters-ai-ban

23. "Local Opposition to Data Centers Is Surging. So Are Canceled Projects," Heatmap News, January 2026. https://heatmap.news/politics/data-center-cancellations-2025

24. "California SB 1047 and the Future of AI Safety Regulation," Carnegie Endowment for International Peace, September 2024. https://carnegieendowment.org/posts/2024/09/california-sb1047-ai-safety-regulation

25. "OpenAI's new safety framework allows 'critical risk' AI if rivals do it first," Fortune, April 16, 2025. https://fortune.com/2025/04/16/openai-safety-framework-manipulation-deception-critical-risk/

26. "A Race to Extinction: How Great Power Competition is Making Artificial Intelligence Existentially Dangerous," Harvard International Review, September 2023. https://hir.harvard.edu/a-race-to-extinction-how-great-power-competition-is-making-artificial-intelligence-existentially-dangerous/

27. "Scale AI announces multimillion-dollar defense, military deal," CNBC, March 5, 2025. https://www.cnbc.com/2025/03/05/scale-ai-announces-multimillion-dollar-defense-military-deal.html

28. "AI 2027 Compute Forecast," AI-2027 Research, 2025. https://ai-2027.com/research/compute-forecast
